The Challenge

What’s the challenge?

Making your thinking visible in writing is a critical competence. The ability to communicate and debate ideas coherently and critically is a core graduate attribute. In many disciplines, writing provides a significant window into the mind of the student, evidencing mastery of the curriculum and the ability to reflect on one’s own learning. Arguably, in the humanities and social sciences, writing is the primary source of evidence. Moreover, as dialogue and debate move from face-to-face to online in a variety of genres and digital channels, discourse shifts from being ephemeral to persistent, providing a new evidence base.

Learning to do this is hard, and feedback is expensive to give. The evidence consistently shows that timely, personalised feedback is one of the key factors affecting learning, and students consistently ask for quicker, better feedback. Yet assessing writing is extremely time-consuming, whether the text is a brief first assignment, a draft essay, a thesis chapter, or a research article in preparation for peer review. Moreover, no academic can provide detailed feedback on draft after draft, 24/7, to hundreds of students. This is the niche that automated feedback can fill, complementing the expert assessment that only skilled academics can provide.

How does AcaWriter work?

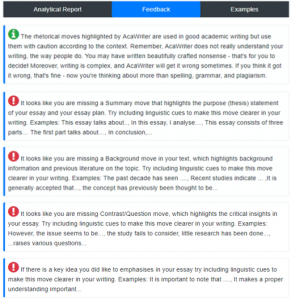

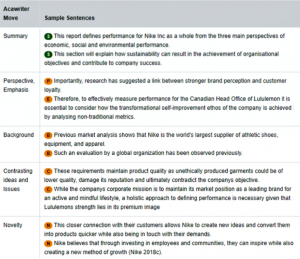

Students learn that critical, reflective, academic writing must make their thinking visible. This is conveyed through particular linguistic forms, which are hallmarks of academic writing. AcaWriter can identify some of these patterns, and provide instant feedback to help students improve their drafts.

The niche for automated feedback. This is the focus of the long-term Academic Writing Analytics (AWA) project. CIC is developing a formative feedback app for academic writing, which we call AcaWriter, in close partnership with academics from diverse faculties, HELPS and IML. This Natural Language Processing (NLP) tool identifies the metadiscourse corresponding to rhetorical moves. These moves use linguistic cues to signal to the reader that a scholarly, knowledge-level claim is being made. However, experience at UTS and the wider research literature both show how difficult these moves are for students to learn and, indeed, for some educators to teach and grade with confidence.
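To give a feel for what "identifying metadiscourse" means, here is a deliberately simplified sketch in Python (3.9+). It is not AcaWriter's implementation, whose analysis is far more sophisticated; the move labels (`contrast`, `emphasis`, `novelty`), the cue lists and the function names are hypothetical, chosen only for illustration. The sketch splits a draft into sentences and flags each sentence that contains a cue phrase associated with a move.

```python
import re

# Hypothetical cue lexicon: each illustrative move label maps to a few
# surface phrases that might signal it. Real rhetorical parsing is far richer.
CUES = {
    "contrast": [r"\bhowever\b", r"\bin contrast\b", r"\bdespite\b"],
    "emphasis": [r"\bimportantly\b", r"\bcrucially\b", r"\bwe argue\b"],
    "novelty": [r"\bnew\b", r"\bnovel\b", r"\bfor the first time\b"],
}


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ., ! or ? when followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tag_moves(text: str) -> list[tuple[str, list[str]]]:
    """Return each sentence paired with the (possibly empty) list of moves it cues."""
    results = []
    for sentence in split_sentences(text):
        lowered = sentence.lower()
        moves = [
            move
            for move, patterns in CUES.items()
            if any(re.search(p, lowered) for p in patterns)
        ]
        results.append((sentence, moves))
    return results


if __name__ == "__main__":
    draft = (
        "Prior work has studied feedback. However, little is known about drafts. "
        "We argue that this gap matters."
    )
    for sentence, moves in tag_moves(draft):
        print(moves or ["-"], sentence)
```

A keyword list like this misses cues expressed in other words and flags words used in non-rhetorical senses, which is one reason AcaWriter provides formative feedback for the writer to interpret rather than a verdict.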

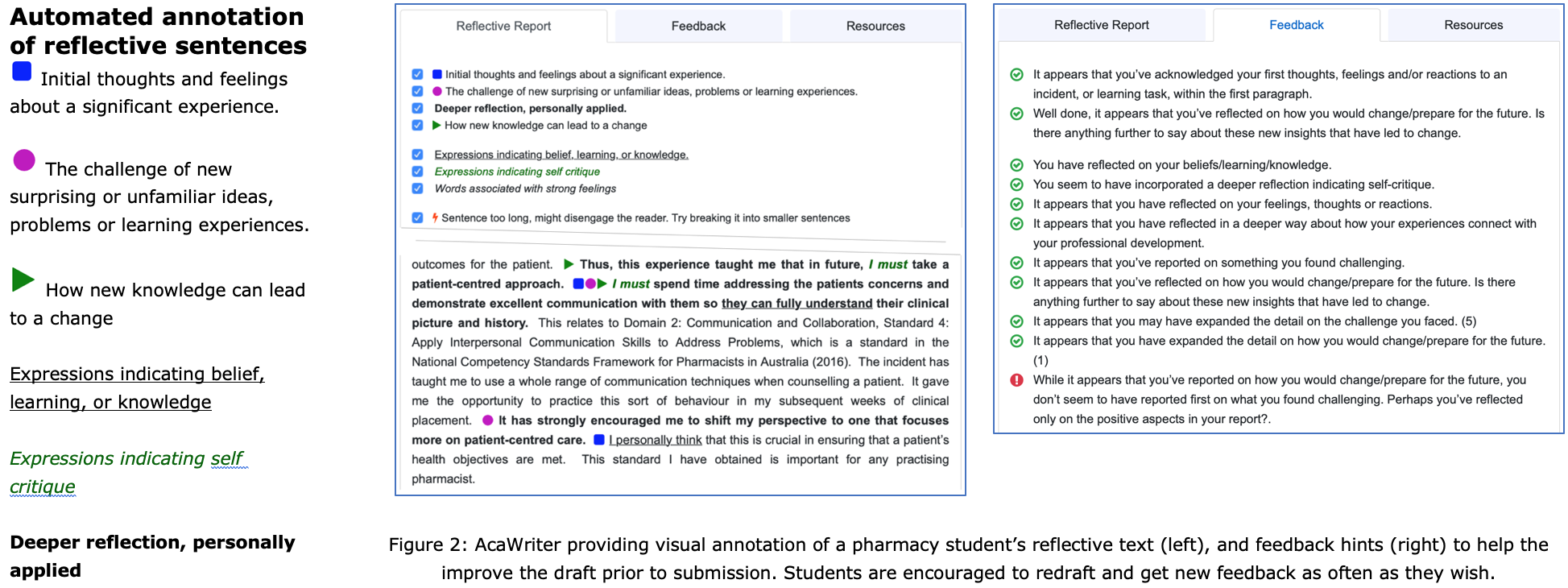

Genres of writing. There is quite a diverse range of writing at university. AcaWriter handles 2 broad classes, which we call analytical and reflective. All the examples so far illustrate analytical writing, so here’s what reflective feedback looks like, which you can see has completely different moves:

These moves are all explained on the orientation website for students and staff.

This is not automated grading, but rapid formative feedback on drafts. A few countries use technology for automated grading at primary and high school level, especially for high-stakes exams, but that is not what we are talking about here. AcaWriter is designed to make visible to learners the ways in which they are using (or failing to use) language to ‘make their thinking visible’, so that they gradually internalise this. A series of pilots has been conducted since 2015, and you can learn about staff and student reactions to it, and its impact on writing, in the peer-reviewed research papers below.

How is AcaWriter used @UTS?

The orientation website includes a section with examples of how AcaWriter has been integrated into UTS degree programs, for instance:

- Improving pharmacy students’ reflective writing
- Improving Business Report Writing (Accounting)
- Improving sample text plus peer discussion (Civil Law)
- Improving research abstracts/intros

How do I get started?

Access to AcaWriter

UTS: AcaWriter is a web-based application, integrated and supported as a UTS learning technology. It is therefore available to all staff and students; just sign in using your UTS credentials.

External: There is a free demo site where anyone can experiment with the tool (stripped-down features, no documents saved, no educator tools for creating assignments). For more access options, click the “Access AcaWriter: Current and Future Options” tab.

Training

Because AcaWriter is a novel kind of tool, quite different from the usual spelling and grammar checkers, we ask everyone to visit the orientation website for students and staff.

The CIC team runs monthly LX.lab briefings for UTS teaching teams, introducing the tool’s rationale and how it can be integrated effectively into the student experience. This integration is key: it is an evidence-based approach that we have developed in close partnership with UTS academics since 2015.

We work one-to-one with academics who want to introduce it into the subjects they coordinate, including designing action research to gather evidence of its impact, which is often published as peer-reviewed research.

We strongly recommend Writing an Abstract, a free tutorial on UTS Open by CIC doctoral researcher Sophie Abel. In just an hour, with lots of multimedia examples, you learn about the key moves you have to make when writing a good research abstract (which translates well to the executive summary of many kinds of analytical report). Once you complete this tutorial, there is a link through to the full version of AcaWriter.

Get in touch

Don’t hesitate to email cic@uts.edu.au with “AcaWriter enquiry” in the Subject line.

Underpinning research

AcaWriter is research-informed, and CIC has been gathering evidence of the factors that influence its value to educators and students since it was first deployed in 2015.

(an account of the “micro-ethics” involved in designing an AI feedback tool) Knight, S., Shibani, A., & Buckingham Shum, S. (2023). A reflective design case of practical micro-ethics in learning analytics. British Journal of Educational Technology, 54, 1837–1857. https://doi.org/10.1111/bjet.13323

(a strategy to promote deeper student engagement with the feedback) Shibani, A., Knight, S. and Buckingham Shum, S. (2022). Questioning learning analytics? Cultivating critical engagement as student automated feedback literacy. Proceedings LAK’22: International Learning Analytics and Knowledge Conference (online). ACM: New York, 326–335. https://doi.org/10.1145/3506860.3506912 [eprint] [Nominated: Best Paper]

(building a model of the depth of reflective writing) Liu, M., Kitto, K., & Buckingham Shum, S. (2021). Combining factor analysis with writing analytics for the formative assessment of written reflection. Computers in Human Behavior, 120, 106733. https://doi.org/10.1016/j.chb.2021.106733

(pharmacy reflective writing on research experiences) Lucas, C., Buckingham Shum, S., Liu, M., & Bebawy, M. (2021). Implementing AcaWriter as a Novel Strategy to Support Pharmacy Students’ Reflective Practice in Scientific Research. American Journal of Pharmaceutical Education, 8320.

(first application to medical students’ reflections) Hanlon, C. D., Frosch, E. M., Shochet, R. B., Buckingham Shum, S. J., Gibson, A., & Goldberg, H. R. (2021). Recognizing Reflection: Computer-Assisted Analysis of First Year Medical Students’ Reflective Writing. Medical Science Educator, 31(1), 109-116. http://doi.org/10.1007/s40670-020-01132-7

(overview of 5 years’ research, joint with academics from Law, Accounting and Pharmacy) Knight, S., Shibani, A., Abel, S., Gibson, A., Ryan, P., Sutton, N., Wight, R., Lucas, C., Sándor, Á., Kitto, K., Liu, M., Mogarkar, R. & Buckingham Shum, S. (2020). AcaWriter: A learning analytics tool for formative feedback on academic writing. Journal of Writing Research, 12(1), 141–186.

(insights into the academics’ experience) Shibani, A., Knight, S. and Buckingham Shum, S. (2020). Educator Perspectives on Learning Analytics in Classroom Practice. The Internet and Higher Education, 46. Available online 20 February 2020.

Buckingham Shum, S. and Lucas, C. (2020). Learning to Reflect on Challenging Experiences: An AI Mirroring Approach. Proceedings of the CHI 2020 Workshop on Detection and Design for Cognitive Biases in People and Computing Systems, April 25, 2020. [slides]

Knight, S., Abel, S., Shibani, A., Goh, Y. K., Conijn, R., Gibson, A., Vajjala, S., Cotos, E., Sándor, Á. and Buckingham Shum, S. (2020). Are You Being Rhetorical? An Open Corpus of Machine Annotated Rhetorical Moves. Journal of Learning Analytics, 7(3), 138–154.

Shibani, A. (2019). Augmenting pedagogic writing practice with contextualizable learning analytics. Doctoral Dissertation, Connected Intelligence Centre, University of Technology Sydney, AUS.

(students’ responses to reflective writing feedback) Lucas, C., Gibson, A. and Buckingham Shum, S. (2019). Pharmacy Students’ Utilization of an Online Tool for Immediate Formative Feedback on Reflective Writing Tasks. American Journal of Pharmaceutical Education, 83(6), Article 6800. [PDF]

(aligning writing analytics with learning design) Shibani, A., Knight, S. and Buckingham Shum, S. (2019). Contextualizable Learning Analytics Design: A Generic Model and Writing Analytics Evaluations. In Proceedings of the International Conference on Learning Analytics and Knowledge (LAK’19). ACM, New York, NY, USA. [PDF]

(first results from machine learning) Liu, M., Buckingham Shum, S., Mantzourani, E. and Lucas, C. (2019). Evaluating Machine Learning Approaches to Classify Pharmacy Students’ Reflective Statements. Proceedings AIED2019: 20th International Conference on Artificial Intelligence in Education, June 25th – 29th 2019, Chicago, USA. Lecture Notes in Computer Science & Artificial Intelligence: Springer.

(writing research abstracts): Abel, S., Kitto, K., Knight, S., Buckingham Shum, S. (2018). Designing personalised, automated feedback to develop students’ research writing skills. In Proceedings Ascilite 2018

Knight, S., Shibani, A. and Buckingham Shum, S. (2018). Augmenting Formative Writing Assessment with Learning Analytics: A Design Abstraction Approach. London Festival of Learning (Tri-Conference Crossover Track), London (June 2018).

Shibani, A., Knight, S. and Buckingham Shum, S. (2018). Understanding Revisions in Student Writing through Revision Graphs. Poster presented at the 19th International Conference on Artificial Intelligence in Education (AIED’18).

Extended version: Shibani, A., Knight, S. and Buckingham Shum, S. (2018). Understanding Students’ Revisions in Writing: From Word Counts to the Revision Graph. Technical Report CIC-TR-2018-01, Connected Intelligence Centre, University of Technology Sydney.

Shibani, A. (2018). Developing a Learning Analytics Intervention Design and tool for Writing Instruction. In Companion Proceedings of the Eighth International Conference on Learning Analytics & Knowledge (LAK ’18), Sydney, Australia

Shibani, A. (2018). AWA-Tutor: A Platform to Ground Automated Writing Feedback in Robust Learning Design (Demo). In Companion Proceedings of the Eighth International Conference on Learning Analytics & Knowledge (LAK ’18), Sydney, Australia.

(first major case study in analytical writing): Knight, S., Buckingham Shum, S., Ryan, P., Sándor, Á. and Wang, X. (2018). Designing Academic Writing Analytics for Civil Law Student Self-Assessment. International Journal of Artificial Intelligence in Education, 28, (1), 1-28. (Part of a Special Issue on Multidisciplinary Approaches to AI and Education for Reading and Writing – Parts 1 & 2. Guest Editors: Rebecca J. Passonneau, Danielle McNamara, Smaranda Muresan, and Dolores Perin)

Shibani, A., Knight, S., Buckingham Shum, S. and Ryan, P. (2017). Design and Implementation of a Pedagogic Intervention Using Writing Analytics. In Proceedings of the 25th International Conference on Computers in Education. New Zealand: Asia-Pacific Society for Computers in Education.

(foundations for reflective writing): Gibson, A., Aitken, A., Sándor, Á., Buckingham Shum, S., Tsingos-Lucas, C. and Knight, S. (2017). Reflective Writing Analytics for Actionable Feedback. Proceedings of LAK17: 7th International Conference on Learning Analytics & Knowledge, March 13-17, 2017, Vancouver, BC, Canada. (ACM Press). [Preprint] [Replay] Awarded Best Paper.

Buckingham Shum, S., Sándor, Á., Goldsmith, R., Bass, R. and McWilliams, M. (2017). Towards Reflective Writing Analytics: Rationale, Methodology and Preliminary Results. Journal of Learning Analytics, 4(1), 58–84.

This is an extended version of: Buckingham Shum, S., Sándor, Á., Goldsmith, R., Wang, X., Bass, R. and McWilliams, M. (2016). Reflecting on Reflective Writing Analytics: Assessment Challenges and Iterative Evaluation of a Prototype Tool. 6th International Learning Analytics & Knowledge Conference (LAK16), Edinburgh, UK, April 25–29, 2016. ACM, New York, NY. [Preprint] [Replay]

(perspectives from some of the world’s leading teams) Critical Perspective on Writing Analytics. Workshop, 6th International Learning Analytics & Knowledge Conference (LAK16), Edinburgh, UK, April 25, 2016. http://wa.utscic.edu.au/events/lak16wa

Simsek, D., Sándor, Á., Buckingham Shum, S., Ferguson, R., De Liddo, A. and Whitelock, D. (2015). Correlations between automated rhetorical analysis and tutors’ grades on student essays. Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York. ACM.

Access AcaWriter: Current and Future Options

Thank you for your interest in AcaWriter. Below we outline options for accessing it, and invite you to subscribe to receive different kinds of updates.

Free demo site

A free demo site allows anyone to experiment with AcaWriter (stripped-down features, no documents saved, no educator tools for creating assignments).

Bundled with this free writing course

CIC Doctoral Researcher Sophie Abel specialises in academic writing and has published a free course on UTS Open, entitled Writing an Abstract. This roughly one-hour tutorial introduces the hallmarks of a good abstract and includes a wide range of engaging interactive exercises. The course draws on Sophie’s PhD research, in which she brings her expertise in Academic Language & Learning to the challenge of designing automated feedback on writing. Learners signed into this Canvas course can access AcaWriter to test their understanding of the concepts.

Install the open source platform

For institutions wishing to host their own version of AcaWriter and the underlying systems, there is an open source release. This has the advantage that the entire system runs within your institutional environment. However, you will need someone technical (e.g., a SysOps administrator) who can follow the release’s installation instructions and, once it is installed, a web developer who can replace the UTS elements of the user interface with your own details.

- For larger scale usage with students you will likely need to involve your IT team. At UTS we run this as an approved platform hosted securely in our institutional cloud, with single sign-on for students and staff. We can provide details of how we do this on request.

- For research purposes, if you’re not technical, you might partner with your computer science/data science academic colleagues. They are likely to have the expertise and hosting facilities for advanced platforms such as this.

Express interest in a hosted solution

We recognise that this is advanced technology which will be unfamiliar to many educational IT divisions. We therefore invite you to let us know whether you would be interested in a Software-as-a-Service (SaaS) solution, in which you license access to the tool and we host it. This is an option we are considering, and knowing of your interest helps our planning.

Express interest in API services

In a variation on hosting, UTS would run the back-end text analytics services (which do not store student texts after processing), while you install the much simpler AcaWriter web application in your own institutional environment. This lets your institution store its student data locally, which may be a data protection requirement.